Key takeaways:

- Performance testing is essential for ensuring website reliability and user satisfaction, as it helps identify weaknesses and optimize load times.

- Key metrics for performance testing include response time, throughput, and error rates, which provide insights into user experience and system performance.

- Early and continuous testing, combined with collaboration among team members, makes performance strategies more effective and helps catch critical issues before they reach users.

Author: Charlotte Everly

Bio: Charlotte Everly is an accomplished author known for her evocative storytelling and richly drawn characters. With a background in literature and creative writing, she weaves tales that explore the complexities of human relationships and the beauty of everyday life. Charlotte’s debut novel was met with critical acclaim, earning her a dedicated readership and multiple awards. When she isn’t penning her next bestseller, she enjoys hiking in the mountains and sipping coffee at her local café. She resides in Seattle with her two rescue dogs, Bella and Max.

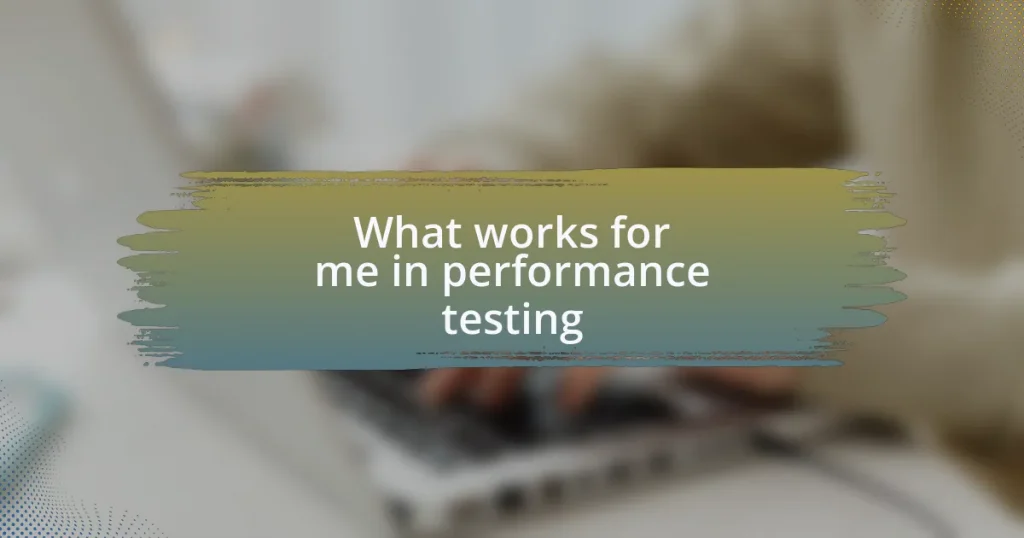

Understanding performance testing

Performance testing is crucial for ensuring that a website can handle varying loads and traffic. I remember the first time I saw a site crash under unexpected user demand; it was a learning moment that deepened my appreciation for this kind of testing. Can you imagine the frustration users feel when a site they love suddenly fails to load because it wasn’t prepared for a spike in traffic?

When I run performance tests, I focus on several factors, including load time, responsiveness, and stability. Each of these contributes to user satisfaction, and seeing a website thrive under pressure always gives me a sense of achievement. It’s fascinating how a few tweaks can improve performance and transform a user’s experience.

The tools and methods used in performance testing range from simple load testing scripts to sophisticated monitoring software. In my experience, the right tool can make all the difference in pinpointing bottlenecks. It’s empowering to see tangible improvements and witness firsthand how better performance drives user engagement and retention. Have you ever tested your website’s performance? The results can be eye-opening!

Importance of performance testing

The importance of performance testing cannot be overstated. I’ve seen firsthand how a sluggish website can drive potential customers away, and it’s disheartening to think about the opportunities lost because a site wasn’t optimized for speed. Have you ever abandoned a web page because it took too long to load? It’s a powerful reminder that in a fast-paced digital world, performance can make or break the user experience.

When a website performs well, users are more likely to engage, return, and even recommend it. In my experience, the positive feedback from users after performance enhancements never ceases to amaze me. It’s a simple yet profound satisfaction to know that my efforts led to faster load times and happier visitors. Wouldn’t you agree that a seamless experience fosters loyalty?

Moreover, performance testing helps identify weaknesses before they become critical issues. I recall a project where a minor coding flaw caused severe delays under peak-traffic conditions. Because comprehensive tests exposed it, we resolved it before launch and avoided a potential public relations headache. This proactive approach not only safeguards the site’s reputation but also builds trust with users, who expect reliability in their online interactions.

Common performance testing tools

There are several performance testing tools that have made my work significantly easier. For instance, I often rely on Apache JMeter for load testing, which simulates multiple users accessing a web application simultaneously. I remember using it on a particularly demanding project; the insights it provided about server response times were invaluable, guiding us to optimize our backend effectively.
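One way to turn a JMeter run into figures like those server response times is to post-process its results file. The sketch below assumes JMeter was run headless (for example `jmeter -n -t plan.jmx -l results.jtl`, where the file names are placeholders) and wrote its default CSV format with a header row that includes the `label`, `elapsed` (milliseconds), and `success` columns. `summarize_jtl` is a hypothetical helper, not part of JMeter.

```python
import csv
import io
from collections import defaultdict
from statistics import mean


def summarize_jtl(jtl_text):
    """Summarize JMeter CSV results per sampler label.

    Assumes a header row with at least the `label`, `elapsed` (ms)
    and `success` ("true"/"false") columns.
    """
    elapsed_by_label = defaultdict(list)
    failures = defaultdict(int)
    for row in csv.DictReader(io.StringIO(jtl_text)):
        label = row["label"]
        elapsed_by_label[label].append(int(row["elapsed"]))
        if row["success"].strip().lower() != "true":
            failures[label] += 1
    return {
        label: {
            "count": len(times),
            "avg_ms": mean(times),
            "max_ms": max(times),
            "failures": failures[label],
        }
        for label, times in elapsed_by_label.items()
    }
```

Reading `results.jtl` into a string and passing it to `summarize_jtl` yields one entry per sampler, which makes the slowest backend endpoints easy to spot.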

Another tool that stands out is LoadRunner. What I appreciate about it is its ability to handle large-scale testing and integrate with other systems. On one project, it allowed us to stress test a critical application, shedding light on bottlenecks we would likely have missed otherwise. It’s a powerful reminder of how essential it is to test under real-world conditions rather than rely on assumptions.

Then there’s GTmetrix, which focuses on page speed analysis. I was pleasantly surprised by how user-friendly it is; its reports help both developers and non-technical stakeholders understand performance metrics. Seeing the before-and-after results of optimizations can be incredibly rewarding. Have you had a chance to use a tool that revealed unexpected insights? Those moments can redefine how we approach performance and user satisfaction.

Key metrics for performance testing

When it comes to performance testing, I’ve learned that focusing on the right key metrics can make all the difference. One critical metric is response time, which measures how quickly a server responds to user requests. I recall a time when a minor delay in response time led to a significant drop in user engagement—a stark reminder that even small lags can have a big impact on user experience.
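Averages can hide exactly the slow requests users notice, so response time is commonly reported as percentiles. Here is a minimal sketch using the nearest-rank method, which is one of several percentile definitions; the sample values in the usage note are made up for illustration.

```python
import math


def percentile(samples_ms, pct):
    """Nearest-rank percentile of response times in milliseconds."""
    if not samples_ms:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def response_time_report(samples_ms):
    """Median, tail percentiles, and worst case for one test run."""
    return {
        "p50": percentile(samples_ms, 50),
        "p95": percentile(samples_ms, 95),
        "p99": percentile(samples_ms, 99),
        "max": max(samples_ms),
    }
```

With `[120, 130, 125, 140, 2000, 135, 128, 122, 131, 127]`, the median is 128 ms while p95 is 2000 ms; the mean of 315.8 ms describes neither the typical request nor the slow one.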

Another metric I prioritize is throughput, which indicates how many requests a server can handle within a given time frame. During one of my performance testing phases, I monitored throughput closely, and it revealed some surprising trends. The data showed that our server could manage a high volume of requests, but our unoptimized database queries ultimately capped throughput. Have you ever found a surprising bottleneck when analyzing throughput?
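Throughput is easier to interpret per time bucket, since a single average over the whole run can mask stalls. This sketch assumes you recorded the completion time of each request in seconds since the start of the test; the function names are illustrative.

```python
import math
from collections import Counter


def throughput_per_second(completion_times_s):
    """Count completed requests in each one-second bucket.

    completion_times_s: non-negative seconds since test start at which
    each request finished.
    """
    buckets = Counter(math.floor(t) for t in completion_times_s)
    last = max(buckets) if buckets else -1
    # Include empty seconds so stalls show up as zeros.
    return [buckets.get(sec, 0) for sec in range(last + 1)]


def summarize_throughput(completion_times_s):
    per_sec = throughput_per_second(completion_times_s)
    return {
        "avg_rps": sum(per_sec) / len(per_sec) if per_sec else 0.0,
        "peak_rps": max(per_sec, default=0),
        "zero_seconds": per_sec.count(0),  # seconds where nothing completed
    }
```

A flat `peak_rps` across runs with more virtual users is one sign that something downstream, such as the database, has become the limit.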

Lastly, error rate is a crucial metric that I never overlook. It measures the proportion of requests that fail during testing, helping to pinpoint where issues arise. I once encountered a high error rate caused by a third-party API failing under load. This incident reinforced the importance of testing not only our own applications but also how they interact with external systems. Have you had similar challenges that changed your approach to performance testing?
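Breaking the error rate down per endpoint is what exposes a failing dependency like that third-party API. A minimal sketch, assuming each result is an `(endpoint, status)` pair where `status` is `None` for requests that timed out or never got a response. Whether 4xx responses count as errors is a judgment call, so it is a parameter here; the endpoint names in the usage note are hypothetical.

```python
from collections import defaultdict


def error_rates(results, treat_4xx_as_error=False):
    """Per-endpoint error rate from (endpoint, status) pairs.

    status is an HTTP status code, or None when no response arrived
    (timeout, connection reset, DNS failure).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for endpoint, status in results:
        totals[endpoint] += 1
        failed = (
            status is None
            or status >= 500
            or (treat_4xx_as_error and status >= 400)
        )
        if failed:
            errors[endpoint] += 1
    return {ep: errors[ep] / totals[ep] for ep in totals}
```

For example, results where `/api/cart` always succeeds but a hypothetical `/payments` proxy returns one 503 and one timeout out of three calls produce rates of 0.0 and roughly 0.67, which points straight at the external dependency.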

My personal performance testing strategies

When it comes to my personal performance testing strategies, I always start with load testing. This approach allows me to simulate users hitting the site to see how it performs under pressure. I vividly remember one project where the site became unresponsive during a simulated high-load test. That moment was a wake-up call, reminding me how critical it is to anticipate user spikes.
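A basic load test needs little more than a pool of concurrent "virtual users" that send requests and time them. The sketch below uses only the Python standard library (`concurrent.futures` threads and `urllib.request`); it is a simplified illustration rather than a substitute for a tool like JMeter. The target URL in the usage note is a placeholder, and a test like this should only be pointed at systems you are allowed to load.

```python
import time
import urllib.error
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def http_get(url, timeout=10):
    """Send one GET request; return the HTTP status, or None if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()  # drain the body so transfer time is included
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # urllib raises 4xx/5xx responses as HTTPError
    except OSError:
        return None  # URLError, timeouts, connection resets


def run_load_test(target, virtual_users=20, requests_per_user=10, request_fn=http_get):
    """Run concurrent user sessions; return (status, seconds) for every request."""

    def user_session(_user_id):
        results = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            status = request_fn(target)
            results.append((status, time.perf_counter() - start))
        return results

    # One thread per virtual user; threads suit I/O-bound HTTP calls despite the GIL.
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        sessions = list(pool.map(user_session, range(virtual_users)))
    return [result for session in sessions for result in session]
```

For example, `run_load_test("http://localhost:8080/", virtual_users=50)` against a local staging server would return 500 `(status, seconds)` pairs that can feed the response-time and error-rate calculations from the metrics section.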

I also make a habit of stress testing, which goes beyond regular load testing to determine how far the application can be pushed before it breaks. Once, when I subjected an app to intense stress, it hit its breaking point much sooner than I had anticipated. It was frustrating, but that knowledge empowered me to build in extra capacity and prevent potential downtime.
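A stress test can be structured as a series of load steps that keep raising concurrency until the system misses its targets. In this sketch, `run_step(users)` is an assumed callback that drives one step of load (for instance with a thread pool) and returns `(succeeded, latency_s)` pairs. The 1% error-rate and 2-second p95 limits are placeholder thresholds, not recommendations.

```python
def p95(latencies):
    """Nearest-rank 95th percentile, using integer arithmetic for the rank."""
    ordered = sorted(latencies)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n)
    return ordered[rank - 1]


def find_breaking_point(run_step, start_users=10, step=10, max_users=500,
                        max_error_rate=0.01, max_p95_s=2.0):
    """Raise concurrency step by step until error rate or p95 latency exceeds its limit.

    run_step(users) must return a non-empty list of (succeeded, latency_s).
    Returns (last_healthy_users, first_failing_users); either may be None.
    """
    last_ok = None
    users = start_users
    while users <= max_users:
        results = run_step(users)
        if not results:
            raise ValueError(f"no results at {users} users")
        error_rate = sum(1 for ok, _ in results if not ok) / len(results)
        latency = p95([lat for _, lat in results])
        if error_rate > max_error_rate or latency > max_p95_s:
            return last_ok, users
        last_ok = users
        users += step
    return last_ok, None
```

The gap between the last healthy step and the first failing one is a rough measure of headroom; finer steps near that boundary narrow it down.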

Analyzing results is another key strategy I rely on. After running various tests, I dig into the data to identify patterns and anomalies. I recall one instance where the overall metrics suggested everything was running smoothly, but I noticed subtle spikes in error rates during peak times. This taught me the importance of not just looking at the numbers but understanding their context, which leads to more informed decisions down the line. Have you ever felt misled by seemingly favorable data?
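Spikes like those only surface when error rates are computed per time window instead of over the whole run. A minimal sketch, assuming results are recorded as `(timestamp_s, succeeded)` pairs; the 60-second window and 5% threshold are arbitrary defaults.

```python
from collections import defaultdict


def error_spikes(events, window_s=60, threshold=0.05):
    """Find time windows whose error rate exceeds `threshold`.

    events: iterable of (timestamp_s, succeeded) pairs.
    Returns (overall_error_rate, [(window_start_s, window_error_rate), ...]).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for ts, ok in events:
        window = int(ts // window_s) * window_s  # start of the bucket
        totals[window] += 1
        if not ok:
            errors[window] += 1
    total = sum(totals.values())
    overall = sum(errors.values()) / total if total else 0.0
    spikes = [
        (w, errors[w] / totals[w])
        for w in sorted(totals)
        if errors[w] / totals[w] > threshold
    ]
    return overall, spikes
```

In a synthetic run of 1,000 requests spread over ten minutes, where 20 of the 100 requests in minute five fail, the overall error rate is a reassuring 2%, yet that minute is flagged at 20%.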

Lessons learned from performance testing

Performance testing has taught me that anticipation is crucial. I remember working on a web app for a seasonal sale where our initial testing rested on a miscalculation of expected traffic. Once the sale went live, the site lagged significantly. This taught me that understanding user behavior is just as important as the metrics we track.
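One way to sanity-check traffic assumptions before a test is Little’s Law, which relates the average number of sessions in the system (L) to the arrival rate (λ) and the average session duration (W): L = λW. The sketch applies it directly. The `peak_factor` multiplier and the figures in the usage note are hypothetical, and the law describes long-run averages, so short bursts can exceed the estimate.

```python
def expected_concurrency(sessions_per_hour, avg_session_s, peak_factor=1.0):
    """Estimate concurrent sessions with Little's Law, L = lambda * W.

    sessions_per_hour: expected arrivals (lambda, converted to per second).
    avg_session_s: average time a visitor stays active (W), in seconds.
    peak_factor: assumed multiplier for a busier-than-average period.
    """
    if sessions_per_hour < 0 or avg_session_s < 0 or peak_factor <= 0:
        raise ValueError("inputs must be non-negative and peak_factor positive")
    arrival_rate = sessions_per_hour / 3600  # sessions per second
    return arrival_rate * avg_session_s * peak_factor
```

For example, 36,000 sessions per hour with an average visit of five minutes gives `expected_concurrency(36000, 300)`, or 3,000 concurrent sessions; planning for a 3x sale-day peak raises that to 9,000, which is the number a load test would then need to reach.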

Another lesson that stands out is how essential it is to keep communication open with the development team. In one project, we missed a crucial bug because we hadn’t shared the test results promptly, and the resulting misalignment pushed back our launch. It made me realize that a collaborative approach can significantly strengthen our overall performance strategy.

I often reflect on the importance of continuous testing. After implementing a new feature, I ran performance tests and discovered unexpected slowdowns. It made me think: How many times do we overlook post-deployment testing? This experience reinforced the idea that performance cannot be an afterthought; instead, it should be woven into the fabric of the development cycle.
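One way to make performance part of the development cycle is a regression gate in the build that compares each run against a stored baseline. A sketch, assuming you keep response-time samples (at least two per run) from a known-good build; the 10% tolerance is an arbitrary example, and p95 comes from the standard library’s `statistics.quantiles`.

```python
from statistics import quantiles


def p95(samples_ms):
    """95th percentile via statistics.quantiles (needs at least two samples)."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    return quantiles(samples_ms, n=20)[-1]  # last of 19 cut points = p95


def check_regression(baseline_ms, candidate_ms, tolerance=0.10):
    """Return (passed, baseline_p95, candidate_p95).

    Fails when the candidate's p95 is more than `tolerance` slower
    than the baseline's p95.
    """
    base, cand = p95(baseline_ms), p95(candidate_ms)
    return cand <= base * (1 + tolerance), base, cand
```

In a CI job, the check could exit non-zero on failure, for example `sys.exit(0 if passed else 1)`, so a slowdown introduced by a new feature blocks the merge instead of surfacing after deployment.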

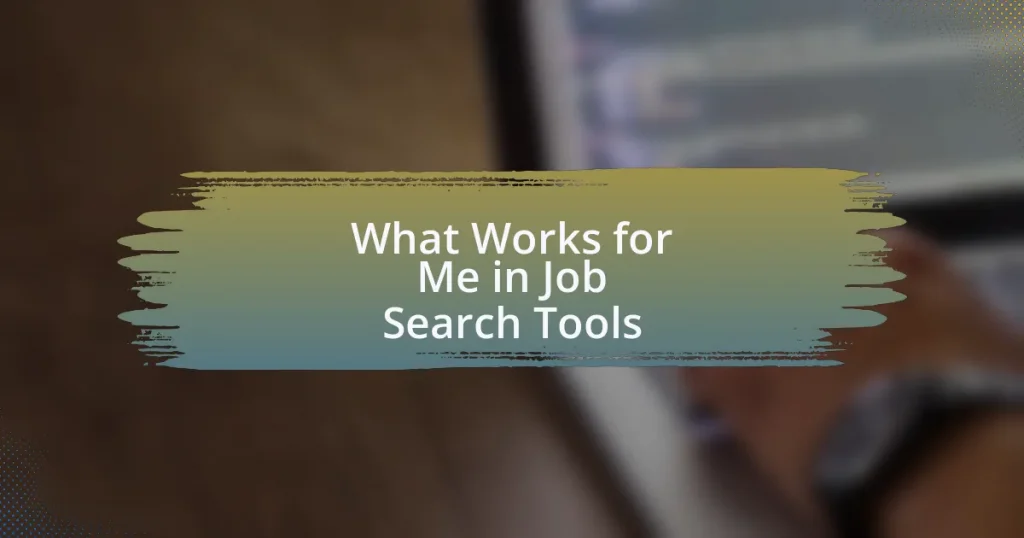

Tips for effective performance testing

When it comes to effective performance testing, I’ve found that starting early in the development process is key. I remember a project where we didn’t begin testing until the final stages. The friction that arose when we realized how many adjustments we needed was palpable. Had we started sooner, we could have addressed issues progressively rather than scrambling at the end. Isn’t it fascinating how prioritizing early testing can save not just time but also stress down the line?

Another insight I’ve gained is the power of using real-world scenarios in testing. During one of my early projects, we relied solely on automated scripts and missed critical user interactions, such as how slow loading times affected navigation. It struck me that simulating actual user behavior during tests brings invaluable context to the results. Don’t you think it’s essential to test not just how fast the site loads but also how it feels to a user?
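Instead of hammering a single URL, a test can replay sessions that move through a site the way people do, with pauses between steps. The sketch below models a hypothetical online-shop journey as weighted transitions (a simple Markov chain) with random think time. The page names and weights are invented; in practice they would come from your own analytics.

```python
import random

# Hypothetical journey: each page maps to (next_page, relative weight) pairs.
NEXT_STEPS = {
    "home": [("search", 6), ("category", 3), ("exit", 1)],
    "search": [("product", 7), ("search", 2), ("exit", 1)],
    "category": [("product", 8), ("exit", 2)],
    "product": [("add_to_cart", 3), ("search", 4), ("exit", 3)],
    "add_to_cart": [("checkout", 6), ("product", 2), ("exit", 2)],
    "checkout": [("exit", 1)],
}


def simulate_session(rng, max_steps=20, think_time_s=(1.0, 5.0)):
    """Return one synthetic session as a list of (page, think_time_s) pairs."""
    page = "home"
    session = []
    while page != "exit" and len(session) < max_steps:
        # Pause a random, human-like interval after viewing this page.
        session.append((page, rng.uniform(*think_time_s)))
        pages, weights = zip(*NEXT_STEPS[page])
        page = rng.choices(pages, weights=weights, k=1)[0]
    return session
```

Each `(page, pause)` pair tells a virtual user which page to request and how long to wait, for example with `time.sleep(pause)`, before the next request. Passing a seeded `random.Random` makes runs reproducible.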

Lastly, I can’t stress enough the benefit of having others review your results. After running extensive tests, I’ve often taken a step back and invited team members to weigh in. Their fresh perspectives have uncovered flaws I missed, turning what could have been a mundane review into a productive brainstorming session. Isn’t collaboration like that a reminder of how teamwork amplifies our strengths?